It's actually 5 fewer problems. But that wouldn't have made a catchy title.

The DiscrimiNAT Firewall now integrates with AWS' Gateway Load Balancer. Deploying this version alleviates five distinct problems.

- Exfiltration and Command & Control TTPs

- Discovery and maintenance of outbound FQDNs' allowlists

- High Availability

- Load Balancing

- Auto Scaling

Read on for more details.

Things Have Changed

Log4j, SolarWinds and Codecov have shown us that having high-quality exfiltration controls is more important than ever.

And nonetheless,

➟ Two-pizza product teams want to move faster than ever and independently.

➟ SREs have SLOs to meet and their managers have SLAs to meet.

➟ Developers will only work with nimble tools that fit with their methodology, such as Terraform.

➟ Deployment times are in minutes and optimised to the second.

➟ Each deployment has become an unmapped, and unrestrained, mesh of microservices and exotic CDNs.

As security engineers would happily tell you, the controls to defend against these, and similar, vulnerabilities have existed for some time:

🇺🇸 NIST SP 800-53, AC-4 Information Flow Enforcement

🇺🇸 NIST SP 800-53, SC-7 Boundary Protection

💳 PCI DSS v4.0, 1.3.2 Outbound traffic from the CDE is restricted

But these controls couldn't have been imposed easily on engineering squads because the available solutions were simply not up to scratch.

⨯ Allowlists for genuine outbound traffic were cumbersome to work out.

⨯ Shared allowlists, which contain CDNs with wildcarded base domains, defeated the purpose.

⨯ Allowlists managed centrally by 'security' were a point of friction.

⨯ 'Appliances' were incredibly complicated to bootstrap with XML payloads.

⨯ Some offered no protection against even simple evasion techniques such as DNS spoofing. (Have you seen our litmus test?)

⨯ Others were susceptible to false-positives, such as in the case of FWAAS-1501.

⨯ Apps & microservices' code would need to be changed to work with explicit proxies, and configurations maintained for dev and prod environments.

They were a recipe for non-adoption.

Until now.

Enter DiscrimiNAT with AWS' GWLB

The remaining 94 problems are not to do with DiscrimiNAT.

Over the last few months we've collaborated with AWS to integrate DiscrimiNAT with the Gateway Load Balancer in the two-arm mode, which delivers:

✓ High Availability, Load Balancing, Auto Scaling

✓ Zero-Downtime Upgrades

✓ Reduced number of hops, hence low latency

✓ Source NAT to the internet in the same hop

✓ Simple, two-subnet design

We're glad to see that AWS has completely thought this through!

➟ A GWLB Endpoint is set as the target for destination 0.0.0.0/0 in the Private subnets' routing tables. This Endpoint does not change.

➟ The Endpoint encapsulates the original packets from the apps in Geneve and delivers them to one of the DiscrimiNAT Firewall instances in the associated Auto Scaling Group.

➟ Flow stickiness is maintained with the 5-tuple of each packet, i.e. protocol, source IP, source port, destination IP, destination port.

➟ The load balancer health checks each firewall instance at regular intervals.

➟ Should an instance fail the health check, traffic is diverted away from it to healthy instances and the Auto Scaling Group begins its replacement process.

➟ With routing applied to the original packet (decapsulated), Source NAT is achieved within the firewall instances.
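The flow stickiness and failover behaviour listed above can be pictured with a small sketch. This is only an illustration: the hashing scheme, the instance names and the `pick_instance` helper are assumptions made for the example, and AWS does not document the GWLB's actual algorithm in this form. It shows that the same 5-tuple keeps landing on the same healthy instance, and that an unhealthy instance simply drops out of the candidate set.

```python
import hashlib
from typing import NamedTuple, Sequence


class FiveTuple(NamedTuple):
    protocol: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def pick_instance(flow: FiveTuple, healthy_instances: Sequence[str]) -> str:
    """Map a flow to one healthy instance, deterministically.

    Hypothetical helper: a plain hash-and-modulo over the 5-tuple,
    not the algorithm the Gateway Load Balancer really uses.
    """
    if not healthy_instances:
        raise ValueError("no healthy firewall instances available")
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(healthy_instances)
    return healthy_instances[index]


# Hypothetical instance names and addresses, for illustration only.
instances = ["fw-a", "fw-b", "fw-c"]
flow = FiveTuple("tcp", "10.0.1.15", 49152, "93.184.216.34", 443)

first = pick_instance(flow, instances)
second = pick_instance(flow, instances)  # same flow, same instance

# If that instance fails its health check, it leaves the candidate list
# and the flow is served by one of the remaining healthy instances.
survivors = [name for name in instances if name != first]
failover = pick_instance(flow, survivors)
```

A plain modulo like this would reshuffle many flows whenever the instance list changes; it is used here only because it keeps the sketch short.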

Two Reference Architectures

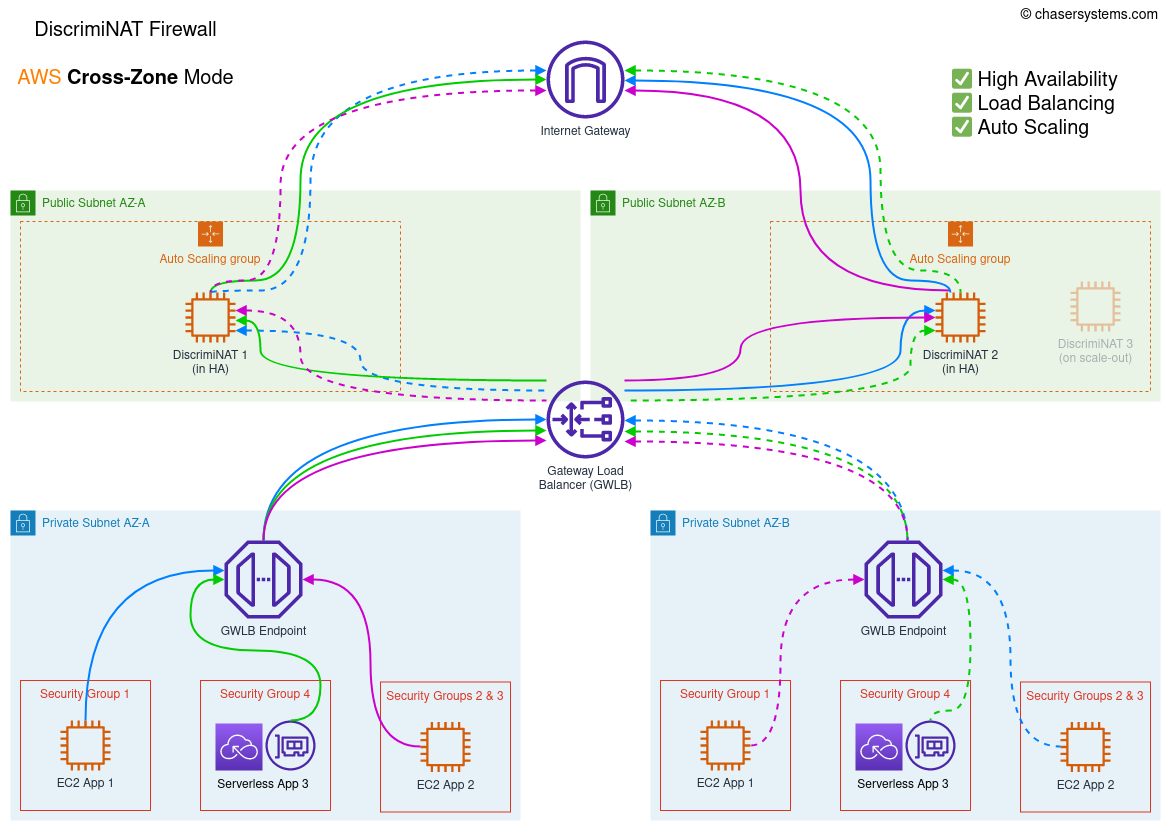

Cross-Zone

In the cross-zone mode, the GWLB distributes traffic evenly across all deployed AZs. This reduces the number of DiscrimiNAT Firewall instances that have to run for high availability, but it increases AWS data-transfer costs and adds a little latency.

Terraform variable high_availability_mode should be set to cross-zone to implement this. This is also the default. The Terraform module is here.

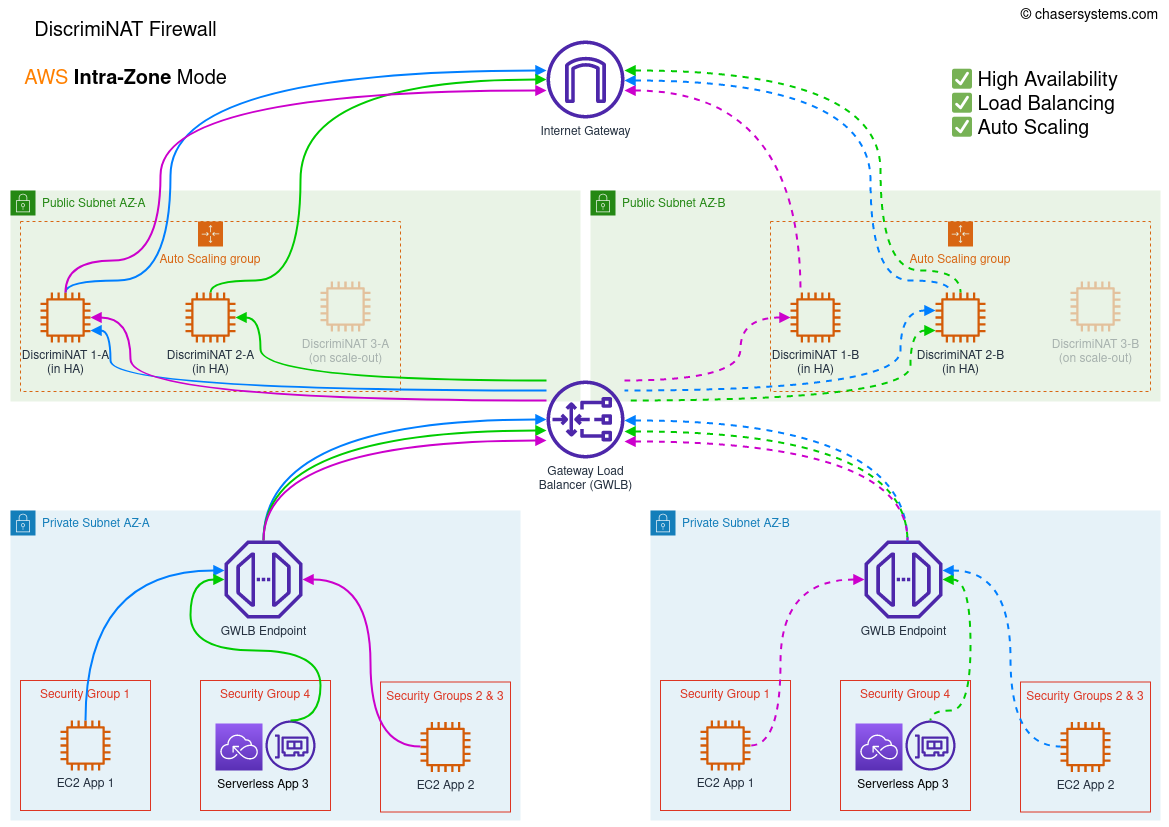

Intra-Zone

In the intra-zone mode, the GWLB distributes traffic evenly across all DiscrimiNAT Firewall instances in the same AZ as the client. For effective high availability, this mode needs at least two instances per deployed AZ. Please note that this does not fully protect you against the failure of an entire AZ on the Amazon side. However, your other services in that zone would likely be impacted too, and therefore would not be sending egress traffic.

Terraform variable high_availability_mode should be set to intra-zone. The Terraform module is here.

Both architectures are discussed in our reference documentation here.
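To make the difference between the two modes concrete, here is a minimal sketch of which instances a client's traffic could reach in each one. The `eligible_instances` function, the AZ names and the instance names are hypothetical and exist only for this example. Only the mode names `cross-zone` and `intra-zone` come from the module's high_availability_mode variable.

```python
from typing import Dict, List


def eligible_instances(
    mode: str, client_az: str, instances_by_az: Dict[str, List[str]]
) -> List[str]:
    """Return the firewall instances a client's traffic may be sent to.

    Hypothetical model of the two reference architectures:
    cross-zone considers every AZ, intra-zone only the client's own AZ.
    """
    if mode == "cross-zone":
        return [
            name
            for az in sorted(instances_by_az)
            for name in instances_by_az[az]
        ]
    if mode == "intra-zone":
        return list(instances_by_az.get(client_az, []))
    raise ValueError(f"unknown high_availability_mode: {mode!r}")


# One instance per AZ: enough redundancy in cross-zone mode...
one_per_az = {"az-a": ["fw-a1"], "az-b": ["fw-b1"]}
cross = eligible_instances("cross-zone", "az-a", one_per_az)

# ...but a single point of failure for az-a clients in intra-zone mode.
intra_single = eligible_instances("intra-zone", "az-a", one_per_az)

# Two instances per AZ restore redundancy in intra-zone mode.
two_per_az = {"az-a": ["fw-a1", "fw-a2"], "az-b": ["fw-b1", "fw-b2"]}
intra_pair = eligible_instances("intra-zone", "az-a", two_per_az)
```

With one instance per AZ, a client in az-a has two candidates in cross-zone mode but only one in intra-zone mode, which is why the intra-zone architecture calls for at least two instances per deployed AZ.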

This is DevSecOps

The trinity of ‘developer experience + security standards + operational efficiency’ is greater than the sum of its parts.

We call it ‘ergonomic cybersecurity’.

For the Dev

✓ Figure out FQDNs to be allowed with clever annotation in the Security Groups directly.

✓ Ready-made queries for CloudWatch to tabulate the observed FQDNs in normal traffic.

✓ Add allowed FQDNs to existing Security Groups as annotations directly.

✓ Completely Terraform-driven (or any other IaC) with just the AWS provider.

✓ Never too late to implement PCI-DSS or NIST SP 800 controls.

✓ Simple, two-subnet design.

✓ Free, expert support always at hand. One of our team will readily pair on a screen-sharing call and get the job done.

For the Sec

✓ Allowlists live in Security Groups; least privilege egress policies FTW.

✓ Everything visible and settable from AWS console itself.

✓ IAM permissions on Security Groups themselves determine who can change them.

✓ TLS 1.2+, SSH v2 enforced and cannot be downgraded.

✓ Robust defence against DNS over HTTPS, SNI spoofing, Encrypted SNI (ESNI), Encrypted Client Hello (ECH), etc.

✓ Updates on emerging threats and the CIS-hardened base image every quarter.

✓ Critical updates in 10 days.

✓ Data processing, with all the DNS and TLS metadata, is within customer-owned VPCs and not in another company's SaaS.

✓ Structured and filterable audit (allowlist change, etc.) logs in CloudWatch.

✓ Structured and filterable egress traffic logs in CloudWatch for full visibility.

For the Ops

✓ Terraform module preconfigured with high availability, load balancing and auto scaling.

✓ Preconfigured health checks at 2 consecutive failures, 5 seconds apart. (Most aggressive possible on AWS.)

✓ Terraform picks up upgrades automatically and applies them with zero downtime.

✓ No new providers or vendor UIs/dashboards; everything in AWS APIs.

✓ Works well on a t3.small and downscales automatically, saving license costs.

✓ No need for another NAT solution, let alone a Managed NAT solution.

✓ No data processing fees.

✓ Same Elastic IP association support when allocated.

✓ No problem with round-robin and low-TTL DNS destinations in the allowlists.

✓ Structured and filterable flow logs in CloudWatch.
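As a rough illustration of the health-check setting in the list above (2 consecutive failures, 5 seconds apart), the sketch below counts how long a run of probe results takes to mark an instance unhealthy. The `seconds_until_unhealthy` function and its timing model (first probe at t = 0, one probe every 5 seconds) are assumptions for this example, not a description of how the load balancer schedules its probes internally.

```python
from typing import Iterable, Optional

FAILURE_THRESHOLD = 2   # consecutive failed probes, as preconfigured
INTERVAL_SECONDS = 5    # spacing between probes, as preconfigured


def seconds_until_unhealthy(probe_results: Iterable[bool]) -> Optional[int]:
    """Return seconds elapsed since the first probe when the instance is
    marked unhealthy, or None if the threshold is never reached.

    Hypothetical model: probe n happens at n * INTERVAL_SECONDS.
    """
    consecutive_failures = 0
    for n, passed in enumerate(probe_results):
        consecutive_failures = 0 if passed else consecutive_failures + 1
        if consecutive_failures >= FAILURE_THRESHOLD:
            return n * INTERVAL_SECONDS
    return None


# Healthy at t=0, then fails at t=5 and t=10: marked unhealthy at t=10.
detected_at = seconds_until_unhealthy([True, False, False])

# Isolated failures never reach two in a row, so nothing is marked.
flapping = seconds_until_unhealthy([False, True, False, True])
```

Under this model an instance that stops responding is taken out of rotation within about two probe intervals.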

Next Steps

Now that you've discovered the simplest northbound firewall solution around,

Get a Demo

Explore the Docs

Explore the Terraform module